[HCI] 10. Universal Design-Multi-Sensory Systems

Universal Design Principles - NCSU

- To guide a wide range of design disciplines, including environments, products, and communications

- Equitable use

- Flexibility in use

- Simple and intuitive to use

- Perceptible information

- Tolerance for error

- Low physical effort

- Size and space for approach and use

1. Equitable Use

- The design is useful and marketable to people with diverse abilities

Guidelines: 1a. Provide the same means of use for all users: identical whenever possible; equivalent when not.

1b. Avoid segregating or stigmatizing any users.

1c. Provisions for privacy, security, and safety should be equally available to all users.

1d. Make the design appealing to all users.

2. Flexibility in Use

- The design accommodates a wide range of individual preferences and abilities.

- Give users as much room for choice as possible.

Guidelines: 2a. Provide choice in methods of use. 2b. Accommodate right- or left-handed access and use. 2c. Facilitate the user’s accuracy and precision. 2d. Provide adaptability to the user’s pace.

3. Simple and Intuitive Use

- Use of the design is easy to understand, regardless of the user’s experience, knowledge, language skills, or current concentration level.

Guidelines: 3a. Eliminate unnecessary complexity. 3b. Be consistent with user expectations and intuition.

- If users look at the design and expect it to behave a certain way, it should actually behave that way.

3c. Accommodate a wide range of literacy and language skills. 3d. Arrange information consistent with its importance. 3e. Provide effective prompting and feedback during and after task completion.

4. Perceptible Information

- The design communicates necessary information effectively to the user, regardless of ambient conditions or the user’s sensory abilities.

Guidelines: 4a. Use different modes (pictorial, verbal, tactile) for redundant presentation of essential information. 4b. Provide adequate contrast between essential information and its surroundings. 4c. Maximize “legibility” of essential information (so the meaning is clear even at a glance). 4d. Differentiate elements in ways that can be described (i.e., make it easy to give instructions or directions). 4e. Provide compatibility with a variety of techniques or devices used by people with sensory limitations.
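Guideline 4b can be made measurable. The notes do not name a metric, so the sketch below assumes one common choice: the contrast ratio defined in WCAG 2.x, computed from the relative luminance of two sRGB colours. The function names are illustrative only.

```python
def _linearize(channel: int) -> float:
    """Convert one 8-bit sRGB channel to linear light (WCAG 2.x definition)."""
    c = channel / 255.0
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(rgb: tuple[int, int, int]) -> float:
    """Weighted sum of the linearized red, green and blue channels."""
    r, g, b = (_linearize(v) for v in rgb)
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def contrast_ratio(fg: tuple[int, int, int], bg: tuple[int, int, int]) -> float:
    """Ratio between 1 (identical colours) and 21 (black on white)."""
    l1, l2 = relative_luminance(fg), relative_luminance(bg)
    lighter, darker = max(l1, l2), min(l1, l2)
    return (lighter + 0.05) / (darker + 0.05)


if __name__ == "__main__":
    ratio = contrast_ratio((0, 0, 0), (255, 255, 255))
    # WCAG level AA asks for at least 4.5:1 for normal-size text.
    print(f"{ratio:.1f}:1, passes AA: {ratio >= 4.5}")
```

The ratio is symmetric, so it does not matter which colour is passed as foreground and which as background.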

5. Tolerance for Error

- The design minimizes hazards and the adverse consequences of accidental or unintended actions.

Guidelines: 5a. Arrange elements to minimize hazards and errors: most used elements, most accessible; hazardous elements eliminated, isolated, or shielded. 5b. Provide warnings of hazards and errors. 5c. Provide fail safe features. 5d. Discourage unconscious action in tasks that require vigilance.
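As a minimal sketch of how guidelines 5b to 5d might look in software, the hypothetical `Document` class below warns before a destructive action, requires an explicit confirmation, and keeps an undo history as a fail-safe. The class and method names are assumptions made for illustration, not part of the principles.

```python
from dataclasses import dataclass, field


@dataclass
class Document:
    items: list[str] = field(default_factory=list)
    # Deleted entries are kept (with their position) so they can be restored.
    _trash: list[tuple[int, str]] = field(default_factory=list, repr=False)

    def delete(self, index: int, confirmed: bool = False) -> str:
        if not confirmed:
            # 5b / 5d: warn and force a deliberate second step.
            return f"Warning: delete '{self.items[index]}'? Repeat with confirmed=True."
        self._trash.append((index, self.items.pop(index)))
        return "Deleted (undo available)."

    def undo(self) -> bool:
        # 5c: fail-safe; the last deletion can always be reversed.
        if not self._trash:
            return False
        index, item = self._trash.pop()
        self.items.insert(index, item)
        return True


if __name__ == "__main__":
    doc = Document(["intro", "draft", "notes"])
    print(doc.delete(1))                  # only a warning, nothing removed
    print(doc.delete(1, confirmed=True))  # actually removes "draft"
    doc.undo()
    print(doc.items)
```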

6. Low Physical Effort

- The design can be used efficiently and comfortably and with a minimum of fatigue.

Guidelines: 6a. Allow user to maintain a neutral body position. 6b. Use reasonable operating forces. 6c. Minimize repetitive actions. 6d. Minimize sustained physical effort.

7. Size and Space for Approach and Use

- Appropriate size and space is provided for approach, reach, manipulation, and use regardless of user’s body size, posture, or mobility

Guidelines: 7a. Provide a clear line of sight to important elements for any seated or standing user. 7b. Make reach to all components comfortable for any seated or standing user. 7c. Accommodate variations in hand and grip size. 7d. Provide adequate space for the use of assistive devices or personal assistance.

UD Principles’ Coverage

- Principles of Universal Design address only universally usable design, while the practice of design involves more than consideration for usability.

- Designers must also incorporate other considerations such as economic, engineering, cultural, gender, and environmental concerns in their design processes.

- These Principles offer designers guidance to better integrate features that meet the needs of as many users as possible.

Multi-Sensory Systems: Beyond UD in HCI

- More than one sensory channel in interaction

- e.g. sounds, text, hypertext, animation, video, gestures, vision

- Used in a range of applications:

- Particularly good for users with special needs, and virtual reality

- Will cover

- General terminology

- Speech

- Non-speech sounds

- Handwriting

- Considering applications as well as principles

Usable Senses

- The 5 senses (sight, sound, touch, taste and smell) are used by us every day

- Each is important on its own

- Together, they provide a fuller interaction with the natural world

- Computers rarely offer such a rich interaction

- Can we use all the available senses?

- Ideally – yes

- Practically – no

- We can use: sight, sound, touch (sometimes)

- We cannot (yet) use: taste, smell

Multi-modal vs. Multi-media

- Multi-modal systems

- Use more than one sense (or mode) of interaction

- e.g. visual and aural senses: a text processor may speak the words as well as echoing them to the screen

- Multi-media systems

- Use a number of different media to communicate information

- e.g. a computer-based teaching system may use video, animation, text and still images: different media all using the visual mode of interaction; it may also use sounds, both speech and non-speech: two more media, now using a different mode
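The distinction can be sketched as a small lookup: each medium is assigned to the sensory mode it uses, and a system is multi-modal only when its media span more than one mode. The table follows the teaching-system example above; the dictionary and function names are hypothetical.

```python
# Medium -> sensory mode, following the teaching-system example in the notes.
MODE_OF_MEDIUM = {
    "video": "visual",
    "animation": "visual",
    "text": "visual",
    "still image": "visual",
    "speech": "aural",
    "non-speech sound": "aural",
}


def modes_used(media: list[str]) -> set[str]:
    """Set of sensory modes that the given media rely on."""
    return {MODE_OF_MEDIUM[m] for m in media}


def is_multimedia(media: list[str]) -> bool:
    """More than one distinct medium."""
    return len(set(media)) > 1


def is_multimodal(media: list[str]) -> bool:
    """More than one distinct sensory mode."""
    return len(modes_used(media)) > 1


if __name__ == "__main__":
    visual_only = ["video", "animation", "text", "still image"]
    with_sound = visual_only + ["speech", "non-speech sound"]
    print(is_multimedia(visual_only), is_multimodal(visual_only))
    print(is_multimedia(with_sound), is_multimodal(with_sound))
```

A system can therefore be multi-media without being multi-modal, as the first case shows.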

Speech

- Human beings have a great and natural mastery of speech

- This mastery makes it difficult to appreciate the complexities of speech, but it remains an easy medium for communication

Structure of Speech

- Phonemes

- 40 of them

- Basic atomic units

- Sound slightly different depending on the context they are in, these larger units are …

- Allophones

- All the sounds in the language

- Between 120 and 130 of them

- These are formed into …

- Morphemes

- Smallest unit of language that has meaning.

Other Speech terminology

- Prosody

- Alteration in tone and quality

- Variations in emphasis, stress, pauses and pitch

- Impart more meaning to sentences.

- Co-articulation

- The effect of context on the sound

- Transforms the phonemes into allophones

- Syntax – structure of sentences

- Semantics – meaning of sentences

Speech Recognition Problems

- Different people speak differently:

- Accent, intonation, stress, idiom, volume, etc.

- The syntax of semantically similar sentences may vary.

- Background noises can interfere.

- People often “ummm…..” and “errr…..”

- Words are not enough; semantics are needed as well

- Requires intelligence to understand a sentence

- Context of the utterance often has to be known

- Also information about the subject and speaker

- e.g. even if “Errr…. I, um, don’t like this” is recognised, it is a fairly useless piece of information on its own

The Phonetic Typewriter

- Developed for Finnish (a phonetic language, written as it is said)

- Trained on one speaker, will generalise to others.

- A neural network is trained to cluster together similar sounds, which are then labelled with the corresponding character.

- When recognising speech, the sounds uttered are allocated to the closest corresponding output, and the character for that output is printed.

- Requires large dictionary of minor variations to correct general mechanism

- Noticeably poorer performance on speakers it has not been trained on
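The recognition step described above (allocate each sound to the closest labelled output, then print its character) can be sketched as a nearest-cluster lookup. This is not the original neural network: the sketch swaps in plain nearest-centroid matching, and the 2-D feature vectors and cluster centres are made-up values for illustration.

```python
import math

# Hypothetical cluster centres in a toy 2-D "acoustic feature" space, each
# labelled with the character it stands for. A real system would learn these
# from speech and use many more dimensions.
CLUSTERS = {
    "a": (0.9, 0.1),
    "i": (0.1, 0.9),
    "u": (0.1, 0.1),
}


def closest_character(sound: tuple[float, float]) -> str:
    """Return the label of the cluster centre nearest to the sound."""
    return min(CLUSTERS, key=lambda ch: math.dist(CLUSTERS[ch], sound))


def transcribe(sounds: list[tuple[float, float]]) -> str:
    """Print-out of the phonetic typewriter: one character per sound."""
    return "".join(closest_character(s) for s in sounds)


if __name__ == "__main__":
    utterance = [(0.8, 0.2), (0.2, 0.8), (0.0, 0.2)]
    print(transcribe(utterance))
```

A speaker whose sounds fall far from every trained centre would be matched to the wrong label more often, which is one way to read the poorer performance on unseen speakers.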

The Phonetic Typewriter Examples

Speech Recognition: Useful?

- Single user or limited vocabulary systems e.g. computer dictation

- Open use, limited vocabulary systems can work satisfactorily e.g. some voice activated telephone systems

- General user, wide vocabulary systems … still a problem

- Great potential, however

- When users’ hands are already occupied e.g. driving, manufacturing

- For users with physical disabilities

- Lightweight, mobile devices

Speech Synthesis

- The generation of speech

- Useful

- Natural and familiar way of receiving information

- Problems

- Similar to recognition: prosody particularly

- Additional problems

- Intrusive - needs headphones, or creates noise in the workplace

- Transient - harder to review and browse

Speech Synthesis: Useful?

- Successful in certain constrained applications

when the user:

- Is particularly motivated to overcome problems

- Has few alternatives

- Examples:

- Screen readers

- Read the textual display to the user; utilised by visually impaired people

- Warning signals

- Spoken information sometimes presented to pilots whose visual and haptic skills are already fully occupied

Non-Speech Sounds

- Examples: boings, bangs, squeaks, clicks etc.

- Commonly used for warnings and alarms

- Evidence to show they are useful

- Fewer typing mistakes with key clicks

- Video games harder without sound

- Language/culture independent, unlike speech

Non-Speech Sounds: Useful?

- Dual mode displays:

- Information presented along two different sensory channels

- Redundant presentation of information

- Resolution of ambiguity in one mode through information in another

- Sound good for

- Transient information

- Background status information

- e.g. Sound can be used as a redundant mode in the computer operating systems; almost any user action (file selection, window active, disk insert, search error, copy complete, etc.) can have a different sound associated with it
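A minimal sketch of sound as a redundant mode: each user action is presented visually and, where a sound is registered for it, aurally as well, so the information survives if one channel is unavailable. The event keys and sound names are hypothetical placeholders.

```python
# Hypothetical mapping from user actions to short non-speech sounds.
EVENT_SOUNDS = {
    "file_selected": "click",
    "window_active": "soft_chime",
    "disk_inserted": "clunk",
    "search_error": "buzz",
    "copy_complete": "ding",
}


def present(event: str, message: str,
            display_enabled: bool = True, sound_enabled: bool = True) -> dict[str, str]:
    """Return what would be sent to each sensory channel for this event."""
    output: dict[str, str] = {}
    if display_enabled:
        output["visual"] = message
    if sound_enabled and event in EVENT_SOUNDS:
        output["audio"] = EVENT_SOUNDS[event]
    return output


if __name__ == "__main__":
    print(present("copy_complete", "Copy finished"))
    # The user is looking elsewhere: the sound alone still carries the status.
    print(present("copy_complete", "Copy finished", display_enabled=False))
```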

Auditory Icons

- Use natural sounds to represent different types of object or action

- Natural sounds have associated semantics which can be mapped onto similar meanings in the interaction

- e.g. throwing something away ~ the sound of smashing glass

- Problem: not all things have associated meanings

- Additional information can also be presented:

- Muffled sounds if object is obscured or action is in the background

- Use of stereo allows positional information to be added

SonicFinder for the Macintosh (1989)

- Items and actions on the desktop have associated sounds

- Folders have a papery noise

- Moving files – dragging sound

- Copying – a problem … sound of a liquid being poured into a receptacle; rising pitch indicates the progress of the copy

- Big files have louder sound than smaller ones

https://vimeo.com/158610127

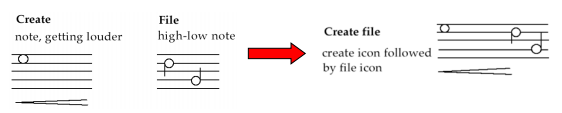
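The two parametric mappings mentioned above (rising pitch for copy progress, louder sounds for bigger files) can be sketched as simple functions. The frequency range, volume range, and size cap below are assumptions chosen for illustration; the notes do not give the values SonicFinder actually used.

```python
def clamp(value: float, low: float, high: float) -> float:
    return max(low, min(high, value))


def copy_pitch_hz(progress: float, low_hz: float = 220.0, high_hz: float = 880.0) -> float:
    """Pitch rises linearly as the copy goes from 0.0 (start) to 1.0 (done)."""
    progress = clamp(progress, 0.0, 1.0)
    return low_hz + (high_hz - low_hz) * progress


def file_volume(size_bytes: int, max_bytes: int = 1_000_000_000) -> float:
    """Volume between 0.2 and 1.0; bigger files sound louder."""
    fraction = clamp(size_bytes / max_bytes, 0.0, 1.0)
    return 0.2 + 0.8 * fraction


if __name__ == "__main__":
    for step in (0.0, 0.5, 1.0):
        print(f"{step:.0%} copied -> {copy_pitch_hz(step):.0f} Hz")
    print(file_volume(10_000), file_volume(500_000_000))
```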

Earcons

- Synthetic sounds used to convey information

- Structured combinations of notes (motives) represent actions and objects

- Motives combined to provide rich information

- Compound earcons

- Multiple motives combined to make one more complicated earcon

Earcons (ctd)

- Family earcons

- Similar types of earcons represent similar classes of action or similar objects: the family of “errors” would contain syntax and operating system errors

- Earcons easily grouped and refined due to compositional and hierarchical nature

- Harder to associate with the interface task since there is no natural mapping
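The compositional and hierarchical nature of earcons can be sketched with a small data structure: a motive is a short sequence of notes, a compound earcon concatenates motives, and a family member inherits its family's motive and adds distinguishing notes. The note names, timbre field, and function names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Motive:
    name: str
    notes: tuple[str, ...]
    timbre: str = "sine"


def compound(*motives: Motive) -> list[str]:
    """Compound earcon: play the motives one after another."""
    return [note for motive in motives for note in motive.notes]


def family_member(base: Motive, name: str, extra_notes: tuple[str, ...]) -> Motive:
    """Family earcon: keep the family's notes and timbre, append a variation."""
    return Motive(name, base.notes + extra_notes, base.timbre)


if __name__ == "__main__":
    create = Motive("create", ("C4", "E4"))
    file_ = Motive("file", ("G4",))
    print(compound(create, file_))  # "create file"

    error = Motive("error", ("A3", "A3"), timbre="square")
    syntax_error = family_member(error, "syntax error", ("C4",))
    os_error = family_member(error, "operating system error", ("F3",))
    print(syntax_error.notes, os_error.notes)
```

Both error earcons start with the same two notes, which is what lets a listener group them as one family even though the sounds themselves have no natural mapping to the task.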

Touch

- Haptic interaction

- Cutaneous perception

- Tactile sensation; vibrations on the skin

- Kinesthetics

- Movement and position; force feedback

- Cutaneous perception

- Information on shape, texture, resistance, temperature, comparative spatial factors

- Example technologies

- Electronic braille displays

- Force feedback devices

- Phantom OMNI

- VR haptic gloves

Handwriting Recognition

- Handwriting is another communication mechanism which we are used to in day-to-day life

- Technology

- Handwriting consists of complex strokes and spaces

- Captured by digitising tablet

- Strokes transformed to sequence of dots

- Large tablets available

- Suitable for digitising maps and technical drawings

- Smaller devices, some incorporating thin screens to display the information

- Smartphones

- Tablet PCs
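As a rough sketch of what "strokes transformed to a sequence of dots" gives a recogniser to work with, the code below treats a stroke as an ordered list of (x, y) points and reduces it to a sequence of eight compass directions. This direction coding is one simple feature chosen for illustration, not a method named in the notes, and it assumes the y axis points upward (many tablets report y downward).

```python
import math

# Eight compass sectors, counter-clockwise starting from east.
DIRECTIONS = ["E", "NE", "N", "NW", "W", "SW", "S", "SE"]


def direction_codes(stroke: list[tuple[float, float]]) -> list[str]:
    """Quantize each pen movement between consecutive points to a direction."""
    codes = []
    for (x0, y0), (x1, y1) in zip(stroke, stroke[1:]):
        if (x0, y0) == (x1, y1):
            continue  # pen did not move between samples
        angle = math.atan2(y1 - y0, x1 - x0)
        sector = round(angle / (math.pi / 4)) % 8
        codes.append(DIRECTIONS[sector])
    return codes


if __name__ == "__main__":
    # A capital "L" drawn from the top: down, then right.
    l_stroke = [(0, 2), (0, 1), (0, 0), (1, 0)]
    print(direction_codes(l_stroke))
```

Unlike a bitmap of the finished letter, the point sequence preserves the order and direction in which the pen moved.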

Handwriting Recognition (ctd)

- Problems

- Personal differences in letter formation

- Co-articulation effects

- Breakthroughs:

- Stroke not just bitmap

- Special ‘alphabet’ – Graffiti on PalmOS

- Current state:

- Usable – even without training

- But many prefer keyboards!

Gesture

- Applications

- Gestural input - e.g. “put that there”

- Sign language

- Technology

- Data glove

- Position sensing devices e.g. MIT Media Room

- Benefits

- Natural form of interaction - pointing

- Enhance sign language communication between signing and non-signing users

- Problems

- User dependent, variable, and subject to co-articulation issues

Users with disabilities

- Visual impairment

- Screen readers, SonicFinder

- Hearing impairment

- Text communication, gesture, captions

- Physical impairment

- Speech I/O, eyegaze, gesture, predictive systems (e.g. Reactive keyboard)

- Speech impairment

- Speech synthesis, text communication

- Dyslexia

- Speech input, output

- Autism

- Communication, education

… plus …

- Age groups

- Older people e.g. disability aids, memory aids, communication tools to prevent social isolation

- Children e.g. appropriate input/output devices, involvement in design process

- Cultural differences

- Influence of nationality, generation, gender, race, sexuality, class, religion, political persuasion etc. on interpretation of interface features

- e.g. interpretation and acceptability of language, cultural symbols, gesture and colour
