[OS] Thread

[toc]

Chapter 4: Multithreaded Programming

- Overview

- Multicore Programming

- Multithreading Models

- Thread Libraries

- Implicit Threading

- Threading Issues

- Operating System Examples

- Windows XP Threads

- Linux Threads

- Java Threads

Objectives

- To introduce the notion of a thread

- a fundamental unit of CPU utilization that forms the basis of multithreaded computer systems

- To discuss the APIs for the Pthreads, Win32, and Java thread libraries

- To explore several strategies that provide implicit threading

- To examine issues related to multithreaded programming

- To cover operating system support for threads in Windows and Linux

Motivation

- Most modern applications are multithreaded

- Threads run within an application

- Many software packages that run on modern desktop PCs are multithreaded

- An application is implemented as a separate process with several threads of control

- The threads execute concurrently

- A web browser might have one thread display images or text

- While another thread retrieves data from the network

- And another updates the display

- And another answers a network request

- Word processor may have a thread for displaying graphics

- Another thread for reading keystrokes

- Another for performing spelling checking in the background

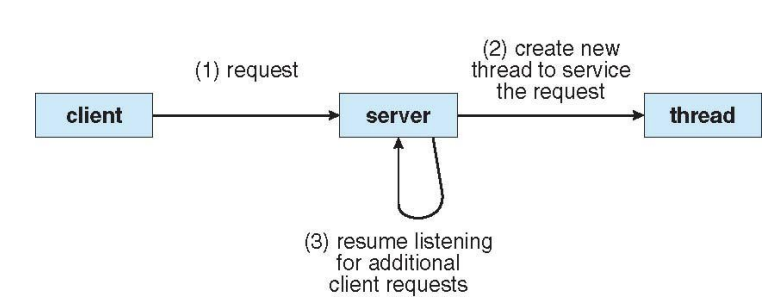

- Web server

- A web server accepts client requests for web pages

- A busy web server may have several clients concurrently accessing

- RPC (Remote Procedure Call)

- RPC servers are multithreaded

Thread - motivation

- Process creation is heavy-weight while thread creation is light-weight

- Can simplify code, increase efficiency

- OS Kernels are also generally multithreaded

Multithreaded Server Architecture

Threads

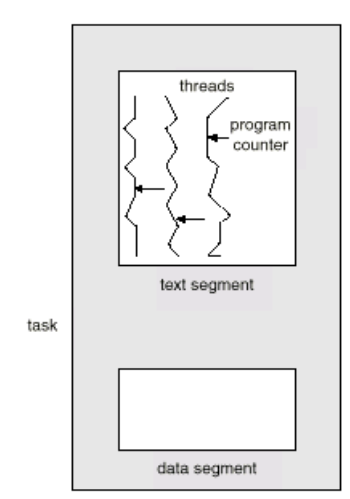

- A process is an executing program with a single thread of control

- Modern OSes allow a process to contain multiple threads of control

- A thread (or lightweight process) is a basic unit of CPU utilization; it consists of:

- Thread ID, program counter

- register set

- stack space

- A thread shares with its peer threads its: (exam topic)

- code section

- data section

- operating-system resources such as open files and signals

- A traditional or heavyweight process is equal to a task with one thread

Multiple Threads within a Task

- In a multithreaded task, while one server thread is blocked and waiting, a second thread in the same task can run.

- Cooperation of multiple threads in the same job confers higher throughput and improved performance.

- Applications that require sharing a common buffer (e.g., producer-consumer) benefit from thread utilization.

- Threads provide a mechanism that allows sequential processes to make blocking system calls while also achieving parallelism.
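
The shared-buffer case mentioned above can be sketched in Java as follows. This is a minimal illustration, not code from the lecture: the class name `ProducerConsumerDemo`, the method `run`, and the buffer capacity of 4 are assumptions chosen for the example. Both threads live in the same process, so they can share the buffer object directly.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;

// Hypothetical example class: one producer thread and one consumer thread
// cooperate through a bounded buffer that both can see.
class ProducerConsumerDemo {
    // Produces 1..count into the shared buffer while a second thread
    // consumes and sums the values. Returns the consumer's total.
    static int run(int count) {
        BlockingQueue<Integer> buffer = new ArrayBlockingQueue<>(4);
        int[] sum = new int[1]; // written only by the consumer, read after join()

        Thread producer = new Thread(() -> {
            try {
                for (int i = 1; i <= count; i++) {
                    buffer.put(i); // blocks while the buffer is full
                }
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        Thread consumer = new Thread(() -> {
            try {
                for (int i = 0; i < count; i++) {
                    sum[0] += buffer.take(); // blocks while the buffer is empty
                }
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });

        producer.start();
        consumer.start();
        try {
            producer.join();
            consumer.join();
        } catch (InterruptedException e) {
            throw new IllegalStateException(e);
        }
        return sum[0];
    }

    public static void main(String[] args) {
        System.out.println("sum = " + run(10));
    }
}
```

While one thread is blocked in `put` or `take`, the other can keep running, which is the behavior the first bullet of this list describes.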

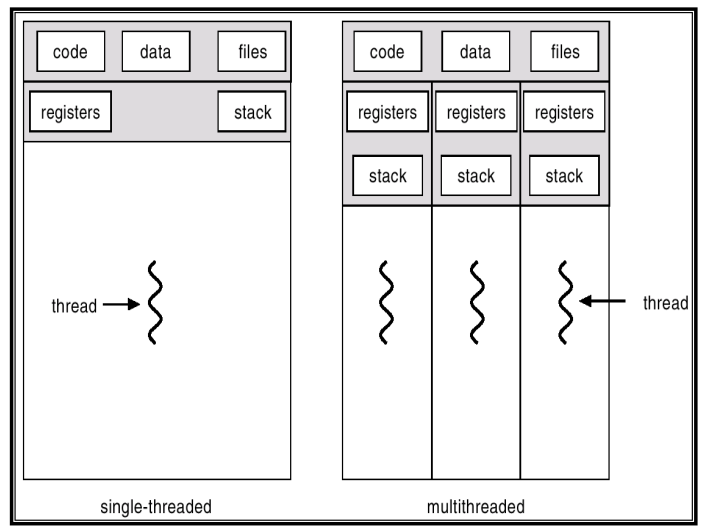

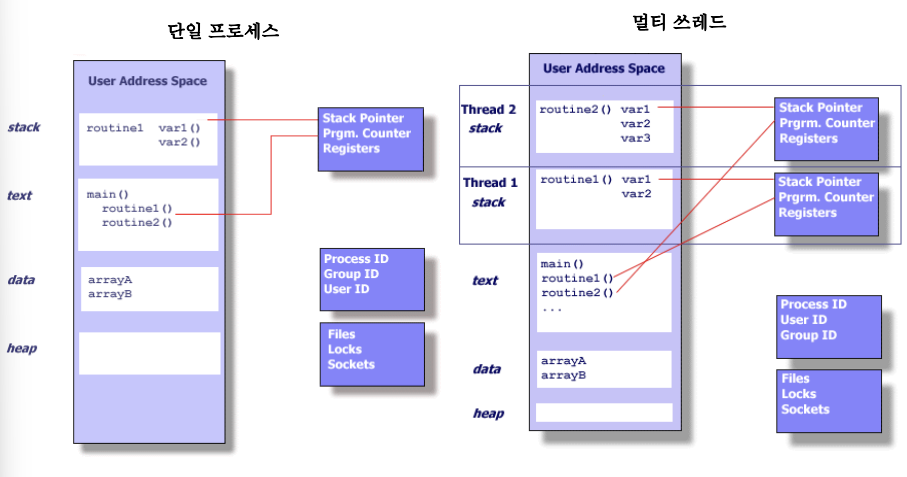

Single and Multithreaded Processes

- Code, data, and files are shared no matter how many threads are created.

- However, each thread executes something different, so each thread has its own registers and stack. (exam topic)

Benefits of multithreaded programming

- Responsiveness (exam topic)

- Allows a program to continue running even if part of it is blocked or is performing a lengthy operation

- A multithreaded web browser allows user interaction in one thread while an image is being loaded in another thread

- Resource Sharing

- Threads share the memory and resources of the process

- Sharing the resources of the process is easier than setting up shared memory or message passing

- Threads make IPC easier

- Code sharing allows an application to have several threads within same address space

- Economy

- Allocating resources for process creation is costly

- More economical to create and context switch threads (resource sharing)

- In Solaris 2, creating a process is about 30 times slower than creating a thread, and context switching a process is about 5 times slower

- Scalability

- A process can take advantage of multiprocessor (MP) architectures

- The benefits of multithreading are greatly increased on an MP architecture, where each thread may run in parallel on a different processor

- A single threaded process can only run on one CPU, no matter how many are available

- Multithreading on a multi-CPU machine increases concurrency

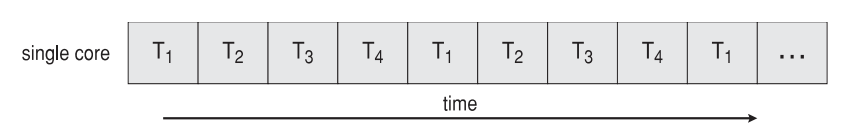

- In a single-processor architecture, the CPU switches between threads quickly enough to create an illusion of parallelism, but in reality only one thread runs at a time (pseudo-parallelism)

With a single thread of control

① ② ③ ④

=> the tasks run one after another (while ① runs, the others cannot run)

With multiple threads of control

① ② ③ ④

=> the tasks can run interleaved (concurrency is guaranteed)

- From the user's point of view, ②, ③, and ④ visibly make progress while ① is running

Multicore Programming

- Single CPU -> multi CPU -> multi-core CPU on single CPU chip

- Each core appears as a separate CPU to the OS (with 4 cores, the OS sees 4 CPUs)

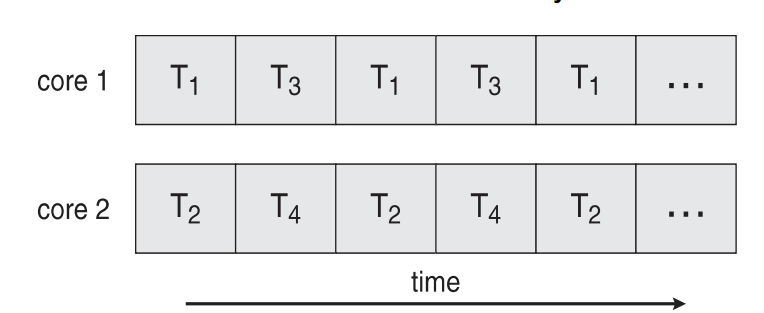

- Multithreaded programming can provide improved concurrency on multicore systems

- Parallelism means true simultaneous execution

- a system can perform more than one task simultaneously

- Concurrency means pseudo-parallelism

- supports more than one task making progress (the key point is that every task keeps advancing)

- On a single processor / core, the (OS) scheduler provides concurrency

- Multicore systems put pressure on programmers; challenges include:

- Dividing activities - identify tasks that can run in parallel on individual cores

- Each task should be independent so that it can be executed in parallel

- Balance - distribute the load evenly so that no single core does too much of the work

- Data splitting

- Data dependency - when one task depends on data from another, execution of the tasks must be synchronized to accommodate the dependency

- Testing and debugging

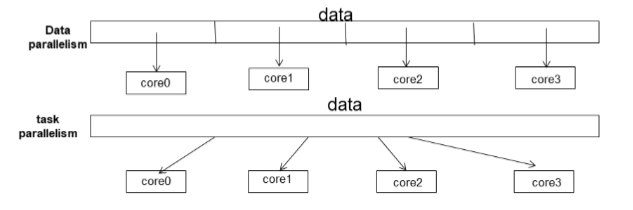

Parallelism implies a system can perform more than one task simultaneously

- Types of parallelism

- Data parallelism - distributes subsets of the same data across multiple cores, same operation on each (exam topic)

- For example, to add the numbers 1 to 100, student 1 adds 1 to 10, student 2 adds 11 to 20, and so on

- Every core runs the same program, but on different data

- Task parallelism - distributes threads across cores, each thread performing a unique operation

- Different tasks are performed, possibly on the same data

- As # of threads grows, so does architectural support for threading

- CPUs have cores as well as hardware threads

- Consider Oracle SPARC T4 with 8 cores and 8 hardware threads per core (from the OS's point of view there are 64 CPUs)
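
Data parallelism can be illustrated with the classroom-style task of adding the numbers 1 to 100: every worker runs the same summing loop, but on its own slice of the range. The sketch below is an assumption-laden illustration; `DataParallelSum`, `parallelSum`, and the slicing scheme are names and choices made for this example only.

```java
// Hypothetical example class: the same operation (summing) is applied by
// every worker thread to a different contiguous slice of 1..upper.
class DataParallelSum {
    static long parallelSum(int upper, int workers) {
        long[] partial = new long[workers];          // one result slot per worker
        Thread[] threads = new Thread[workers];
        int chunk = (upper + workers - 1) / workers; // slice size, rounded up

        for (int w = 0; w < workers; w++) {
            final int id = w;
            final int lo = id * chunk + 1;
            final int hi = Math.min(upper, (id + 1) * chunk);
            threads[w] = new Thread(() -> {
                long s = 0;
                for (int i = lo; i <= hi; i++) {     // empty loop if lo > hi
                    s += i;
                }
                partial[id] = s;
            });
            threads[w].start();
        }

        long total = 0;
        for (int w = 0; w < workers; w++) {
            try {
                threads[w].join();                   // wait, then combine
            } catch (InterruptedException e) {
                throw new IllegalStateException(e);
            }
            total += partial[w];
        }
        return total;
    }

    public static void main(String[] args) {
        System.out.println(parallelSum(100, 10));
    }
}
```

With task parallelism, by contrast, each thread would run a different operation (for instance one computing a sum and another a maximum), possibly over the same data.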

Concurrency vs. Parallelism

- Concurrent execution on single-core system:

- Parallelism on a multi-core system:

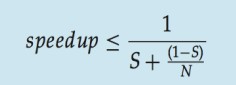

Amdahl’s Law

- Identifies performance gains from adding additional cores to an application that has both serial and parallel components

- S is the serial portion (the fraction that cannot be parallelized)

- 1 - S is the portion that can be parallelized

- N is the number of processing cores

- speedup <= 1 / (S + (1 - S) / N)

- E.g., if an application is 75% parallel / 25% serial, moving from 1 to 2 cores results in a speedup of 1.6 times, and 4 cores give about 2.28 times

- N = 1: speedup = 1

- No speedup

- N = 2: speedup = 1 / (0.25 + 0.75 / 2) = 1.6

- As N approaches infinity, speedup approaches 1 / S

- If 50% serial, max speedup is 2.0

- The serial portion of an application has a disproportionate effect on the performance gained by adding additional cores

- But does the law take into account contemporary multicore systems? (exam topic)
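
The numbers quoted for Amdahl's Law can be reproduced with a few lines of Java. The sketch assumes the usual statement of the law, speedup <= 1 / (S + (1 - S) / N), and evaluates the upper bound; the class name `AmdahlDemo` and the method `speedup` are hypothetical names for this example.

```java
// Hypothetical example class: evaluates the Amdahl's Law upper bound
// 1 / (S + (1 - S) / N) for a serial fraction S and N cores.
class AmdahlDemo {
    static double speedup(double serialFraction, int cores) {
        return 1.0 / (serialFraction + (1.0 - serialFraction) / cores);
    }

    public static void main(String[] args) {
        double s = 0.25; // 25% serial, 75% parallel
        for (int n : new int[] {1, 2, 4, 1024}) {
            System.out.printf("N = %d: speedup = %.3f%n", n, speedup(s, n));
        }
    }
}
```

For S = 0.25 the bound approaches 1 / S = 4 as N grows, which shows why the serial portion dominates once many cores are available.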

User Threads and Kernel Threads

- User threads - management done by user-level threads library

- No kernel support

- Three primary thread libraries:

- POSIX Pthreads

- Windows threads

- Java threads

- Kernel threads - Supported by the Kernel(OS)

- Examples – virtually all general purpose operating systems, including:

- Windows

- Solaris

- Linux

- Tru64 UNIX

- Mac OS X

User Level Threads

- Thread management done by user-level threads library

- Three primary thread libraries:

- POSIX Pthreads

- Win32 threads

- Java threads

- Supported above the kernel, via a set of thread library calls at the user level (Project Andrew from CMU)

- The library supports thread creation and scheduling in user space with no kernel support

- The kernel is unaware of user-level threads, so they are fast to create and manage

- Drawbacks

- If the kernel is single-threaded, then any user-level thread performing a blocking system call will cause the entire process to block, even if other threads are available to run within the application

Kernel Threads

- Kernel-supported threads: support for threads is provided by the OS

- The kernel performs thread creation and scheduling in kernel space

- Disadvantage: generally slower to manage than user threads

- Advantage: if a thread performs a blocking system call, the kernel can schedule another thread for execution

- In an MP environment, the kernel can schedule threads on different processors

- Examples – virtually all general purpose operating systems, including:

- Windows XP/2000, Solaris, Linux, Tru64 UNIX, Mac OS X

- Hybrid approach implements both user-level and kernel-supported threads (Solaris 2).

- Java thread on JVM

How user threads and kernel threads differ

- User threads are managed by a thread library, whereas kernel threads are managed by the OS.

- When a thread makes a blocking system call, the whole process blocks in the user-thread case, whereas with kernel threads the kernel can schedule another thread to run.

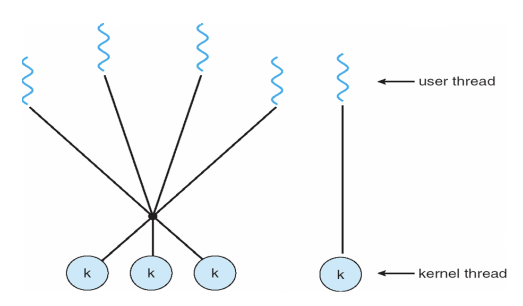

Multithreading Models

- Many systems provide support for both user and kernel threads, resulting in different multithreading models

- Many-to-One

- One-to-One

- Many-to-Many

Multithreading Models

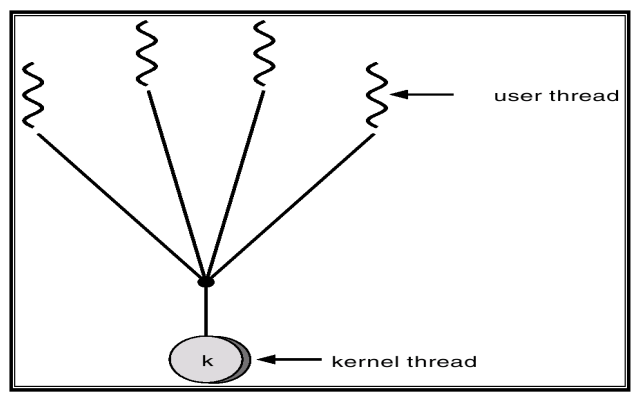

Many-to-One

- Many user-level threads mapped to a single kernel thread

- Mapped to exactly one kernel thread!

- Thread management is done in user space, so it is efficient

- The advantage is that it places no burden on the kernel

- Because only one thread can access the kernel at a time, multiple threads are unable to run in parallel on MPs (exam topic)

- Multiple threads may not run in parallel on a multicore system because only one may be in the kernel at a time

- Allows the developer to create as many user threads as desired; however, true concurrency is not gained because the kernel can schedule only one thread at a time

- Drawback - the entire process will block if a thread makes a blocking system call

- One thread blocking causes all to block

- Used on systems that do not support kernel threads (user-level thread libraries) – few systems currently use this model

- Examples:

- Solaris Green Threads

- GNU Portable Threads

Multithreading Models: Many-to-One Model

- For any of the four user threads to run, it must be assigned to the single kernel thread.

- The kernel therefore treats all of the user threads as one thread, no matter how many of them are running.

- Because there is only one kernel thread, if even one of the four user threads blocks, all the others end up blocked as well.

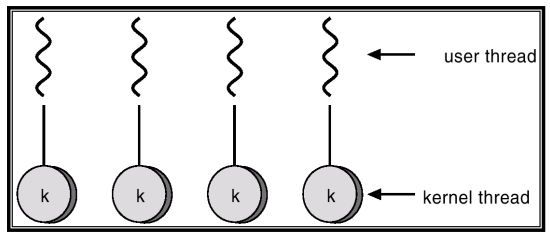

One-to-one Model

- Maximizes concurrency compared with many-to-one

- Each user-level thread maps to a kernel thread

- Creating a user-level thread creates a kernel thread

- Provides more concurrency than the many-to-one model by allowing another thread to run when a thread makes a blocking system call, and by allowing multiple threads to run in parallel on MPs

- Disadvantages

- Creating a user thread requires creating the corresponding kernel thread

- This puts a heavy burden on the kernel, which needs as many threads of control as there are user-level threads

- The number of threads per process is sometimes restricted due to overhead

- Because the kernel can support only a limited number, some mappings may become impossible

- Examples

- Windows NT/XP/2000, Linux, Solaris 9 and later, OS/2

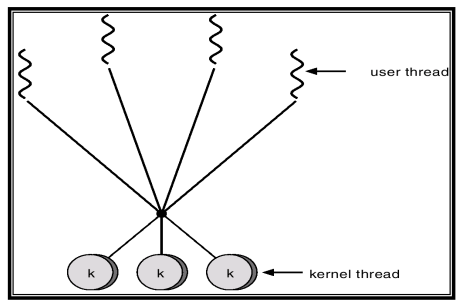

Many-to-Many Model

- A model that combines the strengths of many-to-one and one-to-one

- The goal is one-to-one behavior with less burden on the kernel

- Allows many user-level threads to be mapped to a smaller or equal number of kernel threads

- Rather than accepting every user thread, the kernel supports only as many threads as it can handle comfortably

- That is, a smaller or equal number

- Allows the operating system to create a sufficient number of kernel threads

- In the many-to-one model, true concurrency is not gained because the kernel can schedule only one thread at a time

- The one-to-one model allows greater concurrency, but the overhead of creating kernel threads can burden the performance of an application

- Examples

- Solaris prior to version 9

- Windows NT/2000 with the ThreadFiber package

- Solaris 2, IRIX, HP-UX, Tru64 UNIX

Two-level Model

- many-to-many + one-to-one

- Similar to M:M, except that it also allows a user thread to be bound to a kernel thread

- Important threads are guaranteed a dedicated one-to-one mapping, while the remaining, less important threads are multiplexed many-to-many

- Examples

- IRIX

- HP-UX

- Tru64 UNIX

- Solaris 8 and earlier

Thread Libraries

- Thread library provides programmer with API for creating and managing threads

- Two primary ways of implementing

- Library entirely in user space

- Kernel-level library supported by the OS

Pthreads

- May be provided either as user-level or kernel-level

- A POSIX standard (IEEE 1003.1c) API for thread creation and synchronization

- A specification for thread behavior, not an implementation

- The API specifies the behavior of the thread library; the implementation is up to the developers of the library

- Common in UNIX operating systems (Solaris, Linux, Mac OS X)

- Can be provided at user level or at kernel level

- Pthreads fixes the interface but leaves the implementation open: it is a specification that pins down only the inputs, outputs, and overall shape

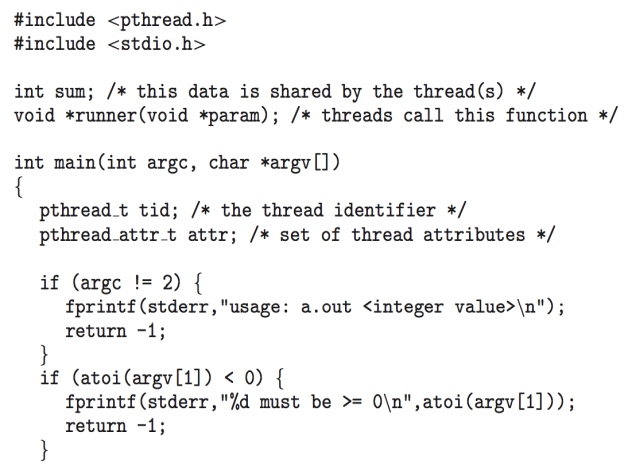

Pthreads Example

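- pthread_create(): analogous to fork()

- pthread_join(): analogous to wait()

- The concepts are the same; the difference is who implements them (a system call provided by the OS versus a library API)

- pthread_attr_t: the data structure that represents thread attributes

- pthread_attr_init(&attr): sets the attributes to their default values

- pthread_create(): &tid is where the new thread's ID is written (output)

- &attr: the attribute values handed to the thread (input)

- runner: the function the thread actually runs

- argv[1]: the parameter passed to runner

- pthread_join(): basically the same as wait(), but it is an API provided by the library

- wait() is a system call

- pthread_exit(): an API provided by the library

- exit(): the process-level counterpart

- The role is the same: pthread_exit() ends the calling thread, exit() ends the whole process

(exam topic) Try running the code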

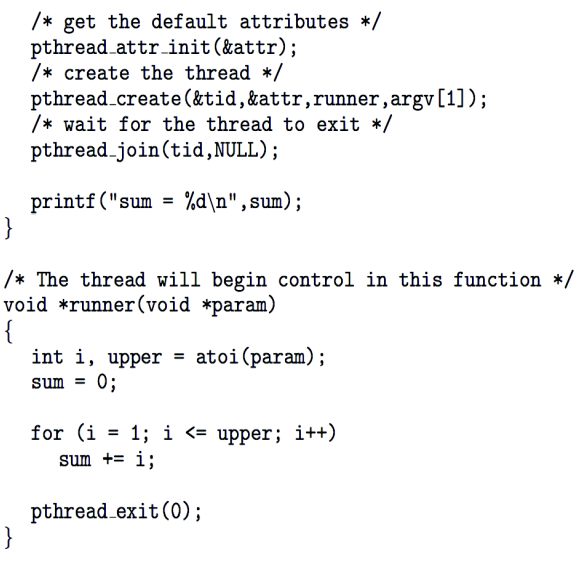

Pthreads Code for Joining 10 Threads

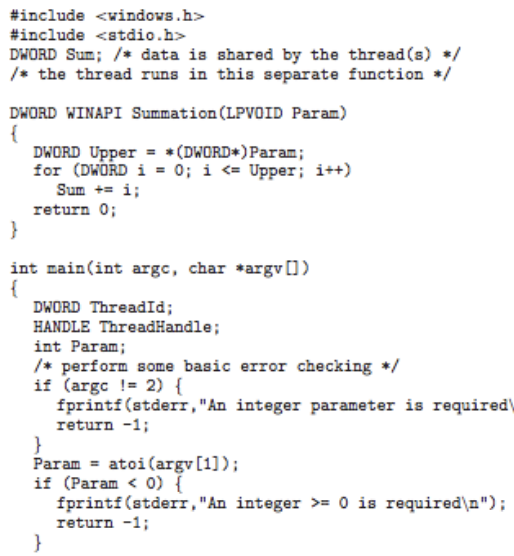

Win32 API Multithreaded C Program

- CreateThread

- WaitForSingleObject

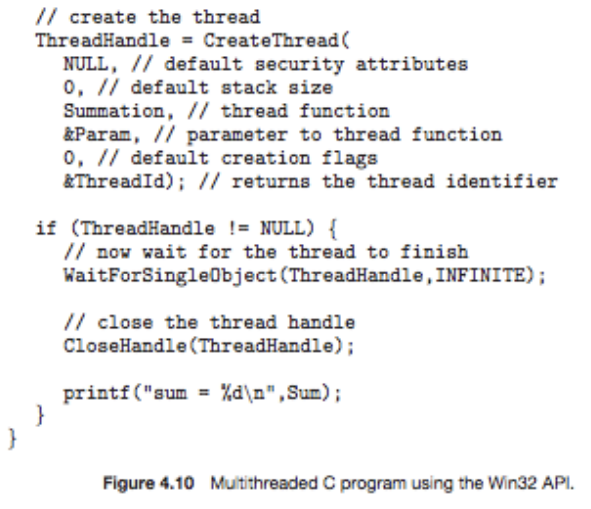

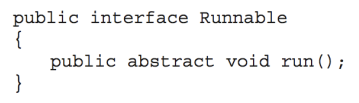

Java Threads

- Most programming languages (PLs) do not let programmers specify concurrent activities; Ada is an exception

- Generally, concurrency has been implemented as OS primitives

- C and C++ programs can perform multithreading by using platform-specific code libraries

- Java provides support at the language level

- Threads are managed by the JVM, not by a user library or the kernel

- Because Java threads are managed by the JVM, programs are not tied to a particular kernel or library

- However, the JVM itself is run by the OS and is mapped onto kernel threads, so it still depends on the OS

- Typically implemented using the threads model provided by the underlying OS

- Java threads may be created by: (not on the exam)

- (1) Extending the Thread class - create a new class derived from Thread and override its run method

- (2) Implementing the Runnable interface

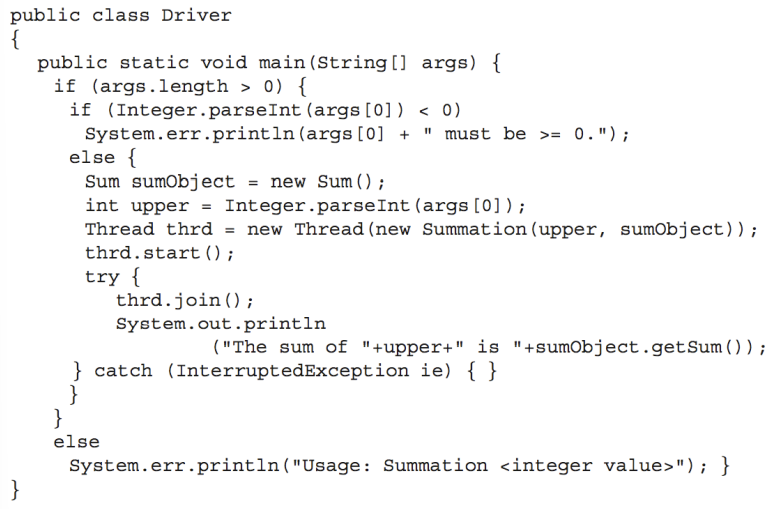

Multi-threaded program for Summation of a non-negative integer

class Summation extends Thread

{

public Summation (int n) {

upper = n;

}

public void run ( ) {

int sum = 0;

if ( upper > 0 ) {

for ( int i = 1; i <= upper; i++)

sum += i ;

}

System.out.println("The sum of " + upper + " is " + sum);

}

private int upper;

}

public class ThreadTester

{

public static void main (String[] args){

if (args.length > 0){

Summation thrd = new Summation (Integer.parseInt (args[0]));

thrd.start(); //create thread//

}

else

System.err.println("usage: Summation <integer value>");

}

}

- start method

- Allocates memory and initializes a new thread in JVM

- Calls run method, making the thread eligible to be run by JVM
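
The difference between calling `start()` and calling `run()` directly can be checked with a small experiment. In this sketch (the class `StartVsRun`, the method `executedOn`, and the thread name "worker" are all hypothetical), the body of the thread records the name of whichever thread executes it.

```java
// Hypothetical example class: shows that start() runs the body on a new
// thread, while calling run() directly is an ordinary method call.
class StartVsRun {
    // Returns the name of the thread that actually executed the body.
    static String executedOn(boolean useStart) {
        String[] seen = new String[1];
        Thread t = new Thread(
                () -> seen[0] = Thread.currentThread().getName(), "worker");
        if (useStart) {
            t.start();              // new thread is created; the JVM invokes run()
            try {
                t.join();           // wait so that seen[0] is visible here
            } catch (InterruptedException e) {
                throw new IllegalStateException(e);
            }
        } else {
            t.run();                // no new thread: runs on the caller's thread
        }
        return seen[0];
    }

    public static void main(String[] args) {
        System.out.println("start(): " + executedOn(true));
        System.out.println("run():   " + executedOn(false));
    }
}
```

Only the `start()` path reports "worker"; the direct `run()` call reports the name of the calling thread.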

Multiple threads

public class ThreadTester {

public static void main(String[] args) {

PrintThread thread1 = new PrintThread("thread1");

PrintThread thread2 = new PrintThread("thread2");

PrintThread thread3 = new PrintThread("thread3");

System.err.println("starting threads");

thread1.start(); thread2.start(); thread3.start();

System.err.println("threads started, main ends\n");

}

}

class PrintThread extends Thread {

private final int sleepTime;

public PrintThread(String name) {

super(name);

sleepTime = (int) (Math.random() * 5001); // random sleep time, 0 to 5000 ms

}

@Override

public void run() {

try {

System.err.println(getName() + " going to sleep for " + sleepTime);

Thread.sleep(sleepTime);

} catch (InterruptedException e) {

Thread.currentThread().interrupt(); // restore the interrupt status

}

System.err.println(getName() + " done sleeping");

}

}

Several thread objects are created.

Java Multithreaded Program (Implementing the Runnable Interface)

Java Multithreaded Program (Cont.)

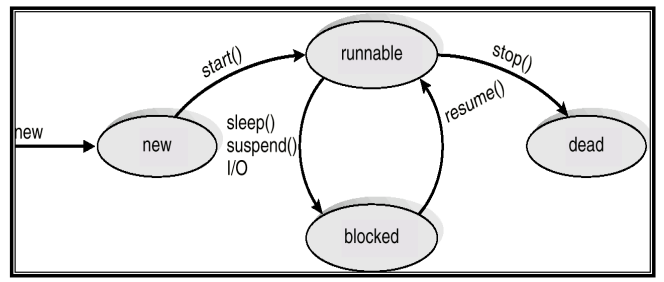
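
For comparison with the `extends Thread` version of the summation program, a Runnable-based variant might look like the sketch below. It is not the code from the slide image; `RunnableSummation`, `getSum`, and `sumTo` are names assumed for this example.

```java
// Hypothetical example class: the task implements Runnable and is handed
// to a Thread, instead of subclassing Thread itself.
class RunnableSummation implements Runnable {
    private final int upper;
    private int sum; // written by the worker, read by the caller after join()

    RunnableSummation(int upper) {
        this.upper = upper;
    }

    @Override
    public void run() {
        int s = 0;
        for (int i = 1; i <= upper; i++) {
            s += i;
        }
        sum = s;
    }

    int getSum() {
        return sum;
    }

    // Runs the task on a separate thread and waits for its result.
    static int sumTo(int upper) {
        RunnableSummation task = new RunnableSummation(upper);
        Thread worker = new Thread(task);
        worker.start();
        try {
            worker.join(); // plays the role that pthread_join() plays in Pthreads
        } catch (InterruptedException e) {
            throw new IllegalStateException(e);
        }
        return task.getSum();
    }

    public static void main(String[] args) {
        System.out.println("The sum of 10 is " + sumTo(10));
    }
}
```

Implementing Runnable keeps the task separate from the thread that runs it, so the same task object could also be submitted to a thread pool.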

Java Thread States

- Blocked: waiting (wait) -> woken by notify, notifyAll, timeout, or interrupt

- sleeping (sleep) -> woken when the sleep interval expires or by an interrupt

- blocked (I/O, synchronization) -> woken on I/O completion, lock acquisition, or interrupt

- Runnable: ready -> running : thread dispatch

- running -> ready : quantum expiry, yield

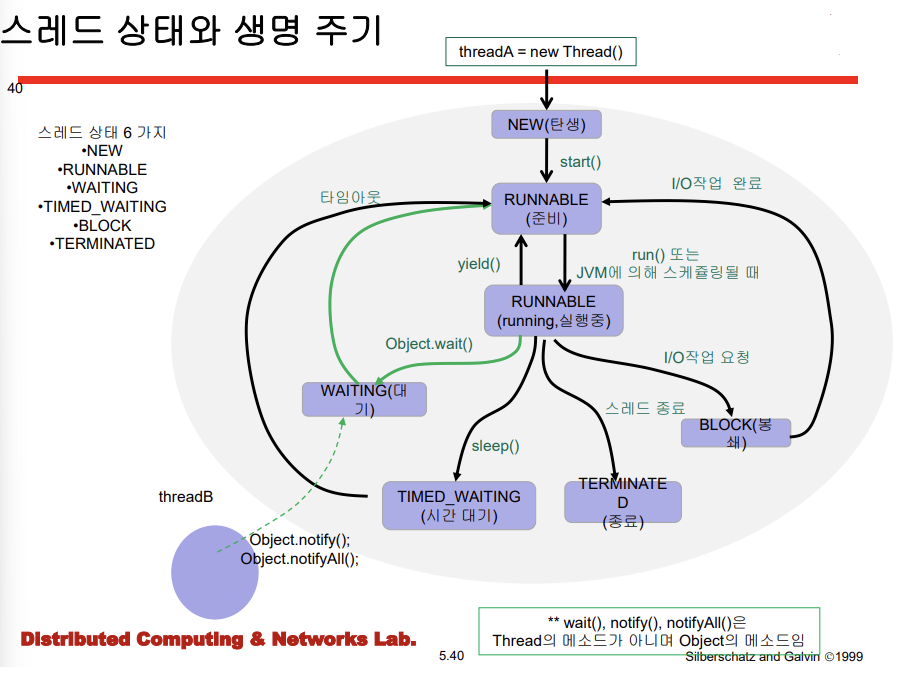
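
Some of these states can be observed from code with `Thread.getState()`. The sketch below is an illustration under assumptions: the class `ThreadStateDemo` and method `observe` are hypothetical, and a `CountDownLatch` is used only as a convenient way to hold the worker in a waiting state until the main thread has looked at it.

```java
import java.util.concurrent.CountDownLatch;

// Hypothetical example class: samples a thread's state before start(),
// while it is parked waiting, and after it has finished.
class ThreadStateDemo {
    static Thread.State[] observe() {
        CountDownLatch gate = new CountDownLatch(1);
        Thread t = new Thread(() -> {
            try {
                gate.await(); // worker waits here until the gate opens
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });

        Thread.State created = t.getState();   // not yet started
        t.start();
        while (t.getState() != Thread.State.WAITING) {
            Thread.yield();                    // let the worker reach await()
        }
        Thread.State waiting = t.getState();   // still parked: gate is closed
        gate.countDown();                      // wake the worker
        try {
            t.join();
        } catch (InterruptedException e) {
            throw new IllegalStateException(e);
        }
        Thread.State finished = t.getState();  // worker has ended
        return new Thread.State[] {created, waiting, finished};
    }

    public static void main(String[] args) {
        for (Thread.State s : observe()) {
            System.out.println(s);
        }
    }
}
```

Note that Java reports a single RUNNABLE state for both "ready" and "running"; the JVM does not expose which of the two the OS scheduler has currently chosen.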

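Thread states and life cycle

- RUNNABLE

- Ready (the ready state among the process states)

- Running (the running state among the process states)

- WAITING and BLOCKED are separate states

- For processes, everything was lumped together as waiting, whether the cause was I/O or an interrupt

- Another thread calls the notify method to wake up a waiting thread.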

JVM & Host OS

- JVM is on top of a host OS

- Allows the JVM to hide the implementation details of underlying OS

- Provide a consistent, abstract environment that allows java programs to operate on any platform that supports a JVM

- JAVA spec. does not specify how java threads are to be mapped to OS

- Windows 95/98/NT/2000 use one-to-one model - each java thread for a JVM maps to a kernel thread

- Solaris 2 implements JVM as many-to-one model

- With Solaris 2.6, JVM was implemented using many-to-many model

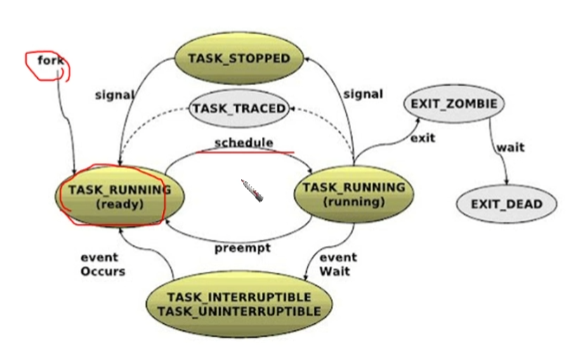

Linux Thread States

If a thread stays in the running state longer than a certain time, it is forcibly moved to the ready state (preemption, covered in a later chapter).

Implicit Threading

- Thread management is handled by the compiler or run-time library so that application developers do not have to deal with it

- Growing in popularity: as the number of threads increases, program correctness becomes more difficult with explicit threads

- Bugs become harder to find

- Explicit threads: threads created directly by the programmer who wrote the program

- Creation and management of threads is done by compilers and run-time libraries rather than programmers

- -> implicit threads

- It is called implicit threading because threads run even though the developer never specifies them explicitly

- Three methods explored (detailed below, but the details are not on the exam)

- Thread Pools

- OpenMP

- Grand Central Dispatch

- Other methods include Intel Threading Building Blocks (TBB) and the java.util.concurrent package

Thread Pools

- Create a number of threads in a pool where they await work; the threads are prepared in advance

- Advantages:

- Usually slightly faster to service a request with an existing thread than to create a new thread

- Allows the number of threads in the application(s) to be bound to the size of the pool

- Separating the task to be performed from the mechanics of creating the task allows different strategies for running the task, e.g., tasks could be scheduled to run periodically

- Windows API supports thread pools:

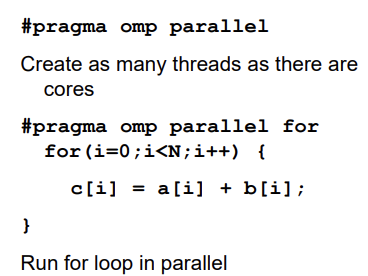

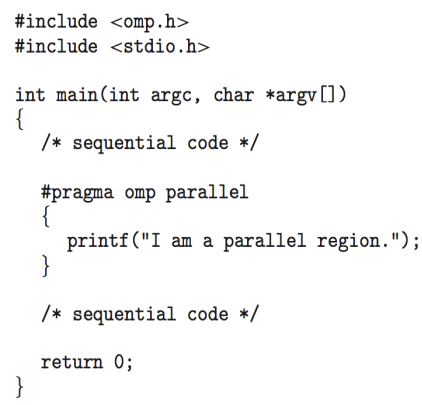
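
The images above show the Windows API; the same idea can be sketched with the `java.util.concurrent` package, which is mentioned earlier as another implicit-threading facility. In this illustration the class `ThreadPoolDemo` and the method `workersUsed` are hypothetical; the pool itself comes from the standard `Executors.newFixedThreadPool` factory.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Set;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

// Hypothetical example class: submits many small tasks to a fixed-size
// pool and records which pool threads ended up running them.
class ThreadPoolDemo {
    static Set<String> workersUsed(int poolSize, int tasks) {
        ExecutorService pool = Executors.newFixedThreadPool(poolSize);
        Set<String> names = ConcurrentHashMap.newKeySet(); // thread-safe set
        try {
            List<Future<?>> pending = new ArrayList<>();
            for (int i = 0; i < tasks; i++) {
                pending.add(pool.submit(() -> {
                    names.add(Thread.currentThread().getName());
                }));
            }
            for (Future<?> f : pending) {
                f.get(); // wait for every task to finish
            }
        } catch (InterruptedException | ExecutionException e) {
            throw new IllegalStateException(e);
        } finally {
            pool.shutdown(); // let the worker threads exit
        }
        return names;
    }

    public static void main(String[] args) {
        System.out.println(workersUsed(3, 20));
    }
}
```

However many tasks are submitted, the number of distinct worker names never exceeds the pool size, which is the bound described in the advantages list.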

OpenMP

- Set of compiler directives and an API for C, C++, FORTRAN

- Provides support for parallel programming in shared-memory environments

- Identifies parallel regions – blocks of code that can run in parallel

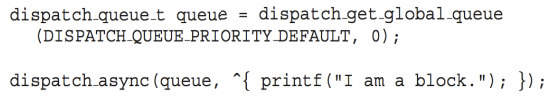

Grand Central Dispatch

- Apple technology for Mac OS X and iOS operating systems

- Extensions to C, C++ languages, API, and run-time library

- Allows identification of parallel sections

- Manages most of the details of threading

- A block is written as "^{ }", e.g.

^{ printf("I am a block"); }

- Blocks are placed in a dispatch queue

- Assigned to an available thread in the thread pool when removed from the queue

- Two types of dispatch queues:

- serial – blocks are removed in FIFO order; each process has its own serial queue, called the main queue

- Programmers can create additional serial queues within a program

- concurrent – blocks are removed in FIFO order, but several may be removed at a time

Threading Issues

- Semantics of fork() and exec() system calls.

- Are the semantics the same as in a single-threaded process, or different?

- Thread cancellation.

- Asynchronous or deferred

- Signal handling

- Synchronous and asynchronous

- Thread pools

- Thread specific data

- A facility is needed for data that is private to a thread

- The issue of data that should not be shared between threads

- Scheduler activations

fork() and exec() system calls

- In a multithreaded program, the semantics of fork() and exec() change

- Does fork() duplicate only the calling thread or all threads?

- fork() creates a new process; should every thread in the calling process be duplicated, or should the new process be single-threaded?

- Some UNIX systems have two versions of fork()

- One that duplicates all threads

- Another that duplicates only the thread that invoked fork()

- exec() usually works as normal: it replaces the running process, including all threads (almost the same as for a single-threaded process)

- If a thread invokes the exec() system call, the program specified in the parameter to exec() replaces the entire process (all threads)

- This is because the code itself is shared by all threads

- Which version of fork() to use depends on whether exec() is called afterwards

- If exec() is called right after fork(), the address space is replaced anyway, so duplicating all threads is pointless

- If exec() is not called, all threads should be duplicated

Thread cancellation (1/2)

- Similar in spirit to abort()

- The task of terminating a thread before it has completed

- E.g., multiple threads are concurrently searching through a database and one thread returns the result

- Suppose each thread searches a different section of the database; once one thread finds the data, the remaining threads should be terminated, which is why cancellation is needed

- A thread that is to be cancelled is referred to as the target thread

- Two general approaches:

- Asynchronous cancellation -> one thread immediately terminates the target thread

- The target is terminated with no chance to release its resources; it dies instantly without knowing why

- Deferred cancellation -> the target thread periodically checks whether it should terminate, giving it an opportunity to terminate itself in an orderly fashion

- Flag checking

- Gives the thread a chance to release its resources (clean up) by itself

- e.g., a cleanup routine

- The target thread periodically checks whether it should die

- If it decides that it should, it terminates itself

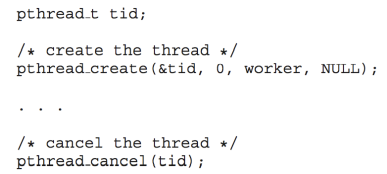

- Pthread code to create and cancel a thread:

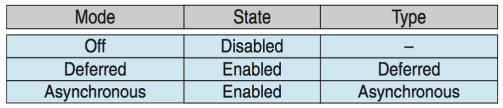

- Invoking thread cancellation only requests cancellation; actual cancellation depends on the thread's state

- If a thread has cancellation disabled, cancellation remains pending until the thread enables it

- The default type is deferred (postponed: the thread cleans up before it dies)

- Cancellation only occurs when the thread reaches a cancellation point

- A cancellation point must therefore be defined

- E.g., pthread_testcancel()

- Then the cleanup handler is invoked

- On Linux systems, thread cancellation is handled through signals

- What about the resources that are allocated to the thread?

- What if a thread is cancelled in the middle of updating data it shares with other threads?

- With asynchronous cancellation, the OS may not free a system-wide resource

- With deferred cancellation, cancellation points should be defined
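
Deferred cancellation by flag checking can be sketched in Java, where `Thread.interrupt()` sets a flag that the target polls. This is an analogy to the Pthreads mechanism rather than the Pthreads API itself, and the names `DeferredCancelDemo` and `cancelAndCheckCleanup` are hypothetical.

```java
import java.util.concurrent.CountDownLatch;

// Hypothetical example class: the target thread polls its interrupt flag
// (its "cancellation point") and cleans up before terminating itself.
class DeferredCancelDemo {
    static boolean cancelAndCheckCleanup() {
        boolean[] cleanedUp = new boolean[1];
        CountDownLatch started = new CountDownLatch(1);

        Thread target = new Thread(() -> {
            started.countDown();
            try {
                // Cancellation point: check the flag once per unit of work.
                while (!Thread.currentThread().isInterrupted()) {
                    Thread.yield(); // stand-in for one unit of real work
                }
            } finally {
                cleanedUp[0] = true; // cleanup handler: release resources here
            }
        });

        target.start();
        try {
            started.await();    // make sure the target is running
            target.interrupt(); // request cancellation; does not kill the thread
            target.join();      // the target ends itself in an orderly way
        } catch (InterruptedException e) {
            throw new IllegalStateException(e);
        }
        return cleanedUp[0] && !target.isAlive();
    }

    public static void main(String[] args) {
        System.out.println("cleaned up: " + cancelAndCheckCleanup());
    }
}
```

The request only sets a flag; the target decides when it is safe to stop, so shared data is never abandoned halfway through an update.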

Signal handling (1/3)

- A signal is used to notify a process that a particular event has occurred

- A signal is generated by the occurrence of a particular event

- A generated signal is delivered to a process

- Once delivered, the signal must be handled by one of two signal handlers:

- default

- user-defined

- Delivery of a signal can be done either synchronously or asynchronously

- Synchronous: illegal memory access, division by zero

- The signal is delivered to the same process that performed the operation causing the signal

- Asynchronous: generated by an event external to a running process

- Terminating a process with Ctrl+C

- Typically, asynchronous signals are sent to another process

Signal handling (2/3)

- Every signal may be handled by

- A default signal handler

- A user-defined handler (takes priority)

- Every signal has a default signal handler that is run by the kernel

- The default signal handler can be overridden by a user-defined signal handler

- If a user-defined signal handler exists, the default handler is ignored and the user-defined one runs

- Handling signals in single-threaded programs is straightforward

- Signals are always delivered to a process

Signal handling (3/3)

- Where, then, should a signal be delivered in a multithreaded program?

- Deliver the signal to the thread to which the signal applies

- Synchronous signals

- Delivered to the thread directly responsible for the signal

- Deliver the signal to every thread in the process

- Asynchronous signals such as Ctrl+C

- Deliver the signal to certain threads in the process

- Some UNIX versions allow a thread to specify which signals it will accept and which it will block

- Delivered only to the first thread found in the process that is not blocking the signal

- Assign a specific thread to receive all signals for the process

- Solaris 2

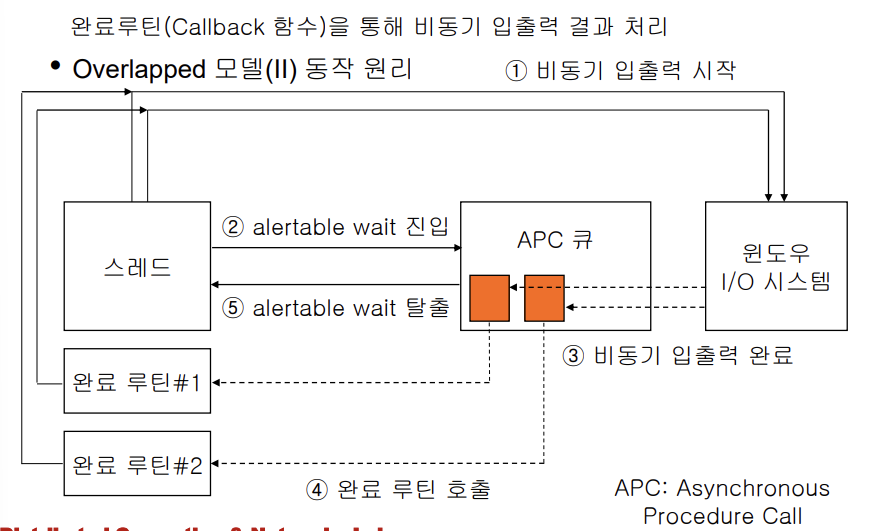

- Windows 2000: asynchronous procedure call (APC)

- Allows a user thread to specify a function that is to be called when the user thread receives notification of a particular event

- Similar to asynchronous signal

Overlapped Model (II)

Thread pools

- Scenario: multithreading a web server

- Creating a separate thread per request is superior to creating a separate process

- But it also has problems

- Time required to create the thread

- Faster than creating a process with fork(), but creating a thread still takes time

- Unlimited thread creation could exhaust system resources

- There is no way to know how many client requests will arrive

- Create a number of threads at process startup and place them in a pool

- Advantages:

- Usually slightly faster to service a request with an existing thread than to create a new thread

- Allows the number of threads in the application(s) to be bound to the size of the pool

- When a thread finishes its work, it does not die but returns to the thread pool

Thread-specific data (Thread-Local Storage)

- In a multithreaded program, all threads within a process share memory, but each thread also needs some data of its own

- Threads belonging to a process share the data of the process

- This sharing is one of the benefits of multithreaded programming

- However, sometimes each thread might need its own copy of certain data

- Thread-local storage (TLS) allows each thread to have its own copy of data

- Most thread libraries provide thread-specific data

- Win32, Pthreads, Java

- Useful when you do not have control over the thread creation process (e.g., when using a thread pool)

- Different from local variables

- Local variables are visible only during a single function invocation (from call to return)

- TLS is visible across function invocations; it persists after a function returns

- Similar to static data in C

- But TLS is unique to each thread

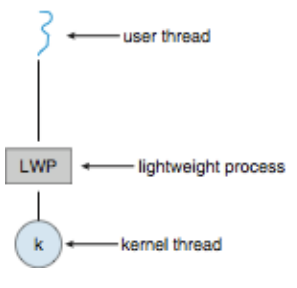

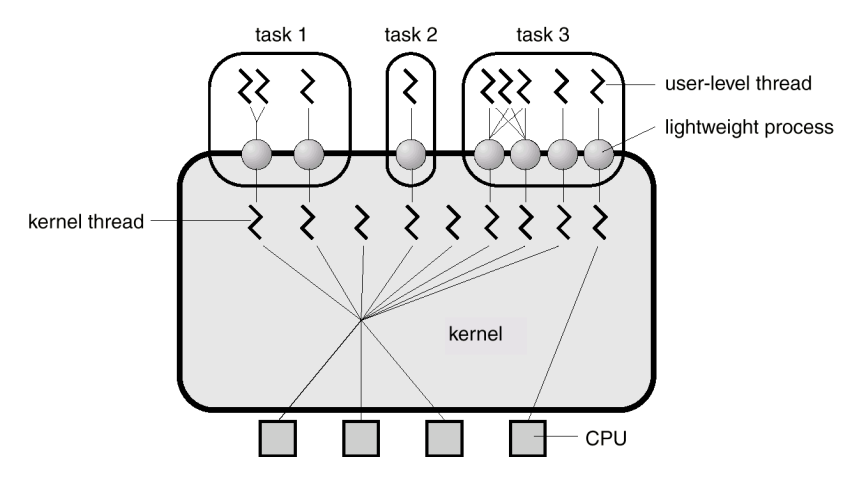
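
In Java, thread-local storage is available through the standard `ThreadLocal` class. The sketch below is a hypothetical illustration (the names `ThreadLocalDemo`, `COUNTER`, `current`, and `runWorkers` are assumptions): one static variable is declared, yet every thread sees and updates only its own copy.

```java
import java.util.Arrays;

// Hypothetical example class: a single static ThreadLocal gives each
// thread an independent counter that survives across method calls.
class ThreadLocalDemo {
    private static final ThreadLocal<Integer> COUNTER =
            ThreadLocal.withInitial(() -> 0);

    // Value of the calling thread's own copy.
    static int current() {
        return COUNTER.get();
    }

    // Each worker increments its own copy `times` times and reports it.
    static int[] runWorkers(int workers, int times) {
        int[] seen = new int[workers];
        Thread[] threads = new Thread[workers];
        for (int w = 0; w < workers; w++) {
            final int id = w;
            threads[w] = new Thread(() -> {
                for (int i = 0; i < times; i++) {
                    COUNTER.set(COUNTER.get() + 1); // touches this thread's copy only
                }
                seen[id] = COUNTER.get();
            });
            threads[w].start();
        }
        for (Thread t : threads) {
            try {
                t.join();
            } catch (InterruptedException e) {
                throw new IllegalStateException(e);
            }
        }
        return seen;
    }

    public static void main(String[] args) {
        System.out.println(Arrays.toString(runWorkers(3, 5)));
        System.out.println("caller's copy: " + current());
    }
}
```

No locking is needed because the copies are never shared, and the calling thread's copy stays at its initial value even after the workers have finished.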

Scheduler Activations (exam topic)

- Both M:M and two-level models require communication to maintain the appropriate number of kernel threads allocated to the application

- Typically use an intermediate data structure between user and kernel threads - the lightweight process (LWP)

- Appears to be a virtual processor on which the process can schedule a user thread to run

- Each LWP is attached to a kernel thread

- How many LWPs to create?

- Scheduler activations provide upcalls - a communication mechanism from the kernel to the thread library

- The kernel asks how many are needed and creates or reclaims kernel threads to match

- This communication allows an application to maintain the correct number of kernel threads

- That is, the number of kernel threads is controlled dynamically

- Fewer when the load is light, more when the load is heavy

Threads Support in Solaris 2

- Solaris 2 is a version of UNIX with support for threads at the kernel and user levels, symmetric multiprocessing, and real-time scheduling.

- Implements the many-to-many model, and provides both the Pthreads API and UI threads

- User level threads with a library containing APIs for thread creation and management

- Developers opt for Pthread library

Threads Support in Solaris 2

- LWP – intermediate level between user-level threads and kernel-level threads.

- Each process contains at least one LWP

- Thread library multiplexes user-level threads on the pool of LWPs for the process

- Only user-level threads currently connected to an LWP accomplish work

- The rest are either blocked or waiting for an LWP on which they can run

- Kernel-level thread

- Each LWP has a kernel-level thread

- Some kernel-level threads run on the kernel’s behalf and have no associated LWP (a thread to service disk request)

- The only objects scheduled for CPU

- User-level threads may be either bound or unbound

- A bound thread

- permanently attached to an LWP

- Runs on the LWP, and by request the LWP can be dedicated to a single processor

- Binding a thread is useful in situations that require quick response time, such as real-time app.

- An unbound thread (default)

- is not permanently attached to an LWP

- All unbound threads in an application are multiplexed onto the pool of available LWPs for the application

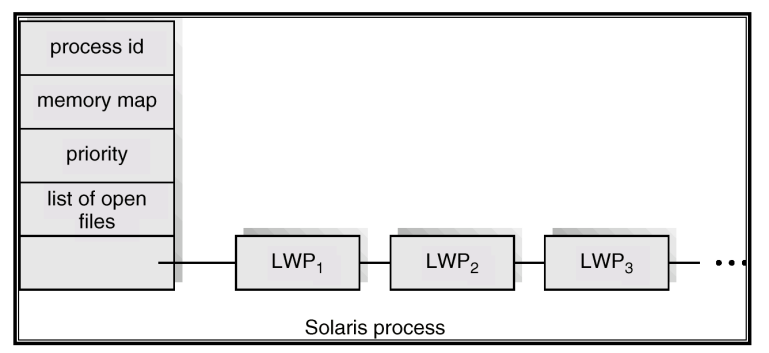

Solaris 2 Threads

Data structures to implement threads on Solaris 2

- Kernel thread:

- small data structure and a stack

- Copy of kernel registers

- A pointer to LWP to which it is attached

- A priority

- LWP: kernel data structures

- register set for the user-level thread it is running

- accounting and memory information

- switching between LWPs is relatively slow.

- User-level thread: exists in user space

- Thread ID, register set including PC and SP

- Stack, priority for scheduling

- no kernel involvement means fast switching.

Solaris 2 Process

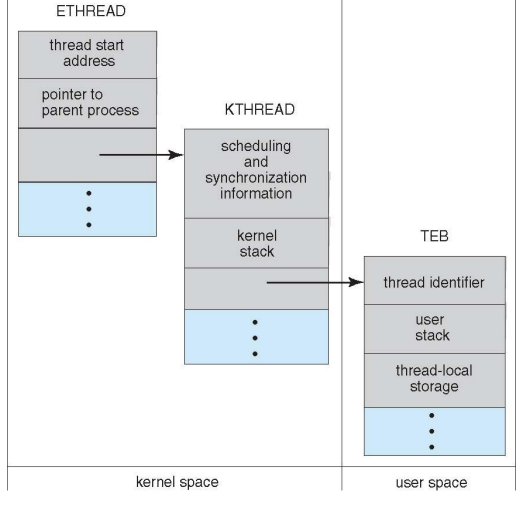

Windows Threads

- Windows implements the Windows API – primary API for Win 98, Win NT, Win 2000, Win XP, and Win 7

- Implements the one-to-one mapping, kernel-level

- Each user-level thread maps to an associated kernel thread

- Each thread contains

- a thread id

- register set representing state of processor

- separate user and kernel stacks for when thread runs in user mode or kernel mode

- private data storage area used by run-time library and dynamic link libraries (DLLs)

- The register set, stacks, and private storage area are known as the context of the threads

- The primary data structures of a thread include:

- ETHREAD (executive thread block) – includes pointer to process to which thread belongs and to KTHREAD, in kernel space

- KTHREAD (kernel thread block) – scheduling and synchronization info, kernel-mode stack, pointer to TEB, in kernel space

- TEB (thread environment block) - thread id, user-mode stack, thread-local storage, in user space

Windows XP Threads Data Structures

윈도우

프로세스와 스레드 (1/2)

- 용어

- 프로세스(process)

- 메모리를 비롯한 각종 리소스를 담고 있는 컨테이너(container)로서 정적인 개념

- 스레드(thread)

- 실제 CPU 시간을 할당받아 수행되는 실행 단위로서 동적인 개념

- 주 스레드(primary thread)

- main() 또는 WinMain() 함수에서 시작되는 스레드로, 프로세스가 시작할 때 생성

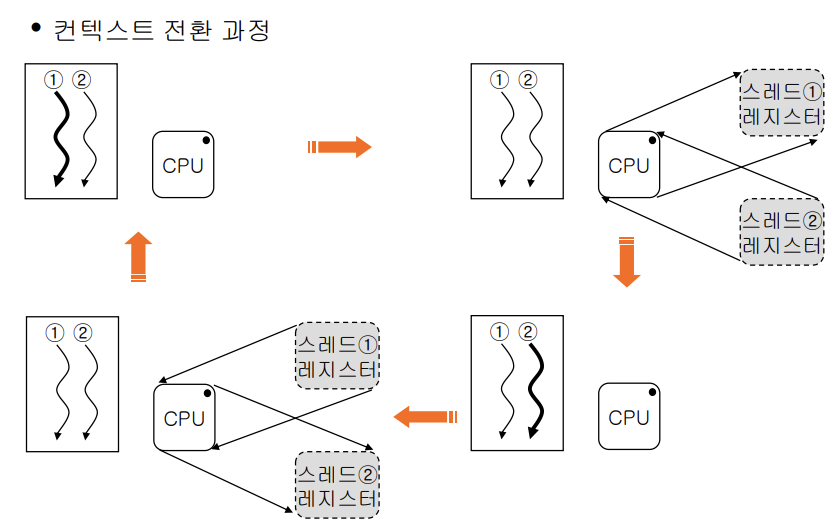

- 컨텍스트 전환(context switch)

- CPU와 운영체제의 협동으로 이루어지는 스레드 실행 상태의 저장과 복원 작업

- 프로세스(process)

프로세스와 스레드 (2/2)

Process와 Thread

- thread마다 stack에 대한 정보가 복제된다.

- 스택만 별도로 복제, 다른 건 공유

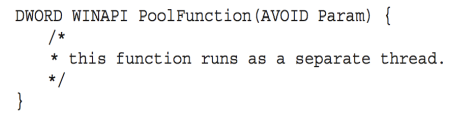

Thread Creation and Termination (1/6)

- Elements needed to create a thread

- The start address of the thread function

- The size of the stack region used when the thread function runs

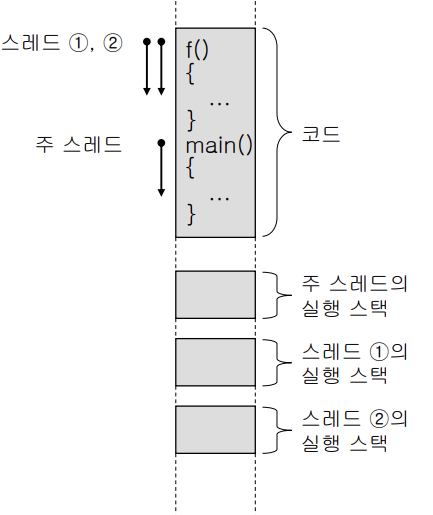

Thread Creation and Termination (2/6)

- The address space of the process

- Two functions

- Three threads

- The thread running main() is the primary thread

Thread Creation and Termination (3/6)

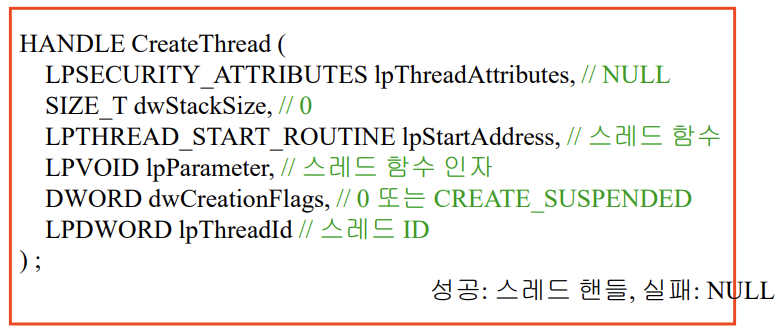

- CreateThread() function (API)

- Creates a thread and then returns a thread handle

Thread Creation and Termination (4/6)

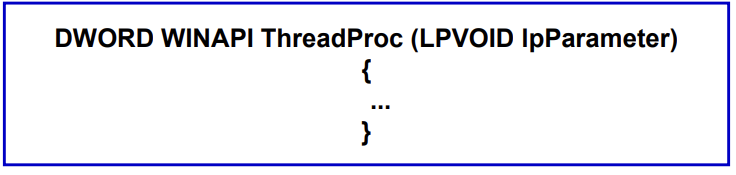

- 스레드 함수 정의

스레드 생성과 종료 (5/6)

-

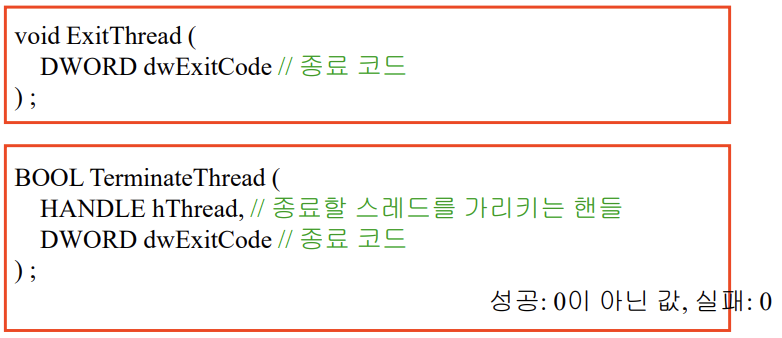

스레드 종료 방법 (4가지 경우)

① 스레드 함수가 리턴

② 스레드 함수 내에서 ExitThread() 함수를 호출

- exit()

③ TerminateThread() 함수를 호출

- kill(), abort()

④ 주 스레드가 종료하면 모든 스레드가 종료

스레드 생성과 종료 (6/6)

- 스레드 종료 함수

#include <windows.h>

#include <stdio.h>  // for printf

struct Point3D

{

int x, y, z;

};

DWORD WINAPI MyThread(LPVOID arg)

{

Point3D *pt = (Point3D *)arg;

while(1){

printf("Running another thread: %d, %d, %d\n",

pt->x, pt->y, pt->z);

Sleep(1000);

}

return 0;

}

int main()

{

// Create the first thread

Point3D pt1 = {10, 20, 30};

DWORD ThreadId1;

HANDLE hThread1 = CreateThread(NULL, 0, MyThread,

(LPVOID)&pt1, 0, &ThreadId1);

if(hThread1 == NULL) return -1;

CloseHandle(hThread1);

// Create the second thread

Point3D pt2 = {40, 50, 60};

DWORD ThreadId2;

HANDLE hThread2 = CreateThread(NULL, 0, MyThread,

(LPVOID)&pt2, 0, &ThreadId2);

if(hThread2 == NULL) return -1;

CloseHandle(hThread2);

while(1){ // infinite loop

printf("Running primary thread...\n");

Sleep(1000);

}

return 0;

}

Three threads in total are running: the main thread, T1, and T2.

Linux Threads

Whether a task counts as a thread or a process depends on what information it shares.

- Linux refers to them as tasks rather than threads

- struct task_struct points to process data structures (shared or unique)

- Linux provides a fork() system call with the traditional functionality of duplicating a process

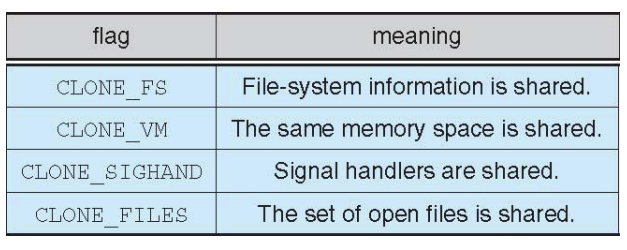

- Thread creation is done through clone() system call.

- clone() behaves much like fork(), except that instead of creating a copy of the calling process, it creates a separate task that shares the address space of the calling process

- A cloned task behaves like a separate thread

- clone() allows a child task to share the address space of the parent task (process)

- With clone(), sharing the address space is possible because of how the kernel represents a task

- Rather than storing a copy of the data in each task's data structure, the kernel stores pointers to the data structures, which may be shared with other tasks

- Linux refers to both processes and threads as tasks

- Aside from cloned tasks, Linux has no separate multithreading support and no separate data structures for threads

- Pthreads implementations are available for user-level multithreading

Linux Threads

- Thread creation uses the fork() and clone() system calls

- Linux does not distinguish between processes and threads

- Uses the term task rather than thread

- clone() takes options that determine how much is shared when a task is created

- struct task_struct points to process data structures (shared or unique)
